As AI becomes more deeply integrated into our lives and businesses, defending against adversarial machine learning threats becomes a top priority. The National Institute of Standards and Technology (NIST) has analyzed these threats, offering vital insights for AI system security. This article unpacks the NIST findings on adversarial machine learning threats and prepares you to fortify your AI systems against emerging adversarial tactics.

Key Takeaways

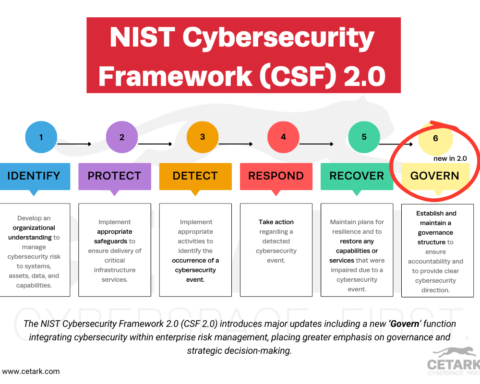

- The NIST AI Risk Management Framework (AI RMF) is a voluntary guideline for building trustworthiness into AI design, development, and use. The NIST report, in turn, emphasizes the systematic management of AI risks. It recognizes the increasing sophistication of adversarial machine learning threats such as evasion and poisoning attacks, alongside risks such as bias.

- Adversarial threats to AI systems include poisoning attacks, which compromise training data; evasion attacks, which manipulate the system's response; and privacy and abuse attacks, which exploit sensitive information. Protecting AI involves adversarial training, algorithm and data security, and constant monitoring and adaptation.

- Future AI security challenges will involve a rise in adversarial threats such as data poisoning and the use of AI in cyberattacks. In preparing for this, AI developers must focus on resilience, stakeholder collaboration and adaptability, while continuous monitoring and strategic adaptation will be crucial to tackling advancing threats.

Exploring the NIST Framework on AI Security

Our pursuit of securing AI systems has led us to the National Institute of Standards and Technology (NIST) as a beacon of guidance. The NIST Framework on AI Security, also known as the AI Risk Management Framework (AI RMF), presents a voluntary guideline for addressing AI risks alongside others, such as cybersecurity and privacy.

The framework offers a systematic approach to integrating trustworthiness into the design, development, and utilization of AI systems.

The Role of the National Institute in AI Safety

NIST plays a critical role in promoting AI safety. Its primary goals include enhancing the trustworthiness of AI technologies and advocating for safe and dependable AI systems. To this end, the NIST AI Risk Management Framework provides guidelines for building responsible AI systems, emphasizing attributes such as validity, reliability, and safety, among others.

The impact of NIST is not limited to guidelines and frameworks. Its influence extends to the broader development and application of AI technologies, advocating for the creation and utilization of reliable and ethical AI systems. The institute also actively collaborates with organizations and institutions to advance AI safety further.

Understanding Adversarial Machine Learning

Adversarial machine learning is the field that studies attacks on machine learning algorithms and devises strategies to counter them. These attacks typically involve:

- introducing subtly altered inputs

- supplying misrepresented data during the training phase

- influencing the behavior of AI systems

- inducing erroneous or harmful outcomes.

Examples of adversarial attacks are diverse, including:

- Tampering with input data to induce misclassification

- Evasion attacks

- Data poisoning

- Model extraction

With artificial intelligence becoming an integral part of our lives, comprehending these threats becomes vital for the safety and effectiveness of AI systems.

Key Findings from the NIST Report

The NIST report on AI security has shed light on several key issues, including:

- The acknowledgment of bias in AI-based products and systems as a fundamental component of trustworthiness

- The need for a structured approach to manage AI risks

- The recognition that AI bias can stem from human biases

The report also examines adversarial threats to AI systems, emphasizing attacks such as evasion and poisoning as well as threats specific to particular modalities such as computer vision.

To counter these threats, the report recommends a structured approach to adversarial machine learning and offers a taxonomy and terminology related to attacks and potential mitigations.

The NIST report provides valuable insights for developing AI defense strategies. It offers recommendations for securing AI systems against attacks, from identifying and mitigating cyberattacks that can manipulate AI behaviour, to developing defences against complex adversarial AI tactics. These findings underscore the importance of recognizing adversarial machine learning threats, offer perspectives on mitigation strategies, and highlight the limitations of current approaches.

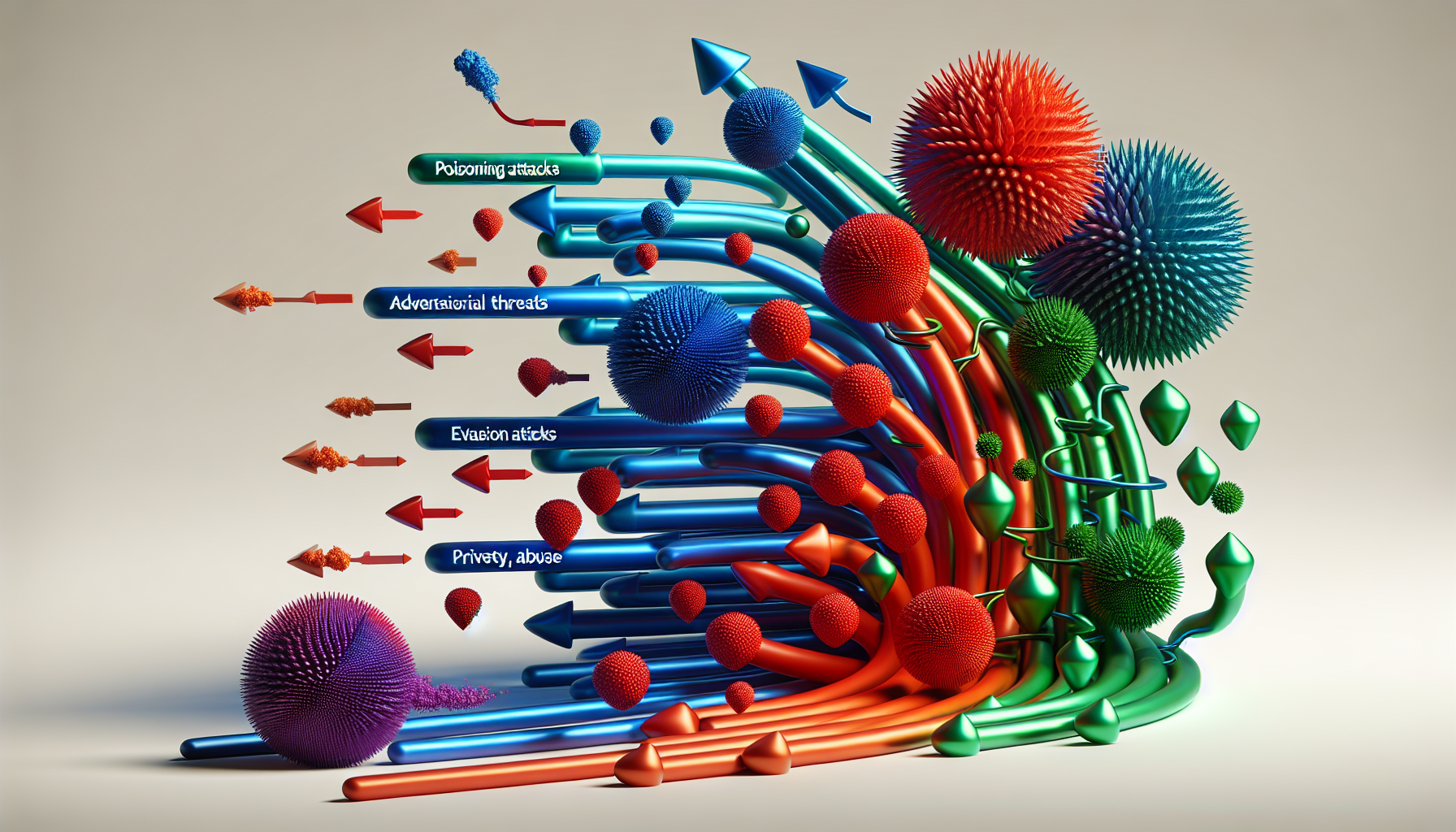

The Spectrum of Adversarial Threats

Adversarial threats to AI systems span a broad spectrum. This range includes poisoning attacks, which introduce false or misleading data into the training dataset and can thereby influence the model's predictions or classifications. For example, an attacker who tampers with the dataset used to train a social media platform's content moderation AI could manipulate the AI's behaviour.

Evasion attacks represent another type of adversarial threat. These attacks cause AI to fail by altering the input in order to manipulate the system’s response, resulting in misinterpretation or misclassification of the input.

Poisoning Attacks: Tainting the Training Data

Poisoning attacks pose a significant risk in the realm of AI security. Their defining characteristics are:

- They involve the deliberate manipulation of an AI system's training set, or the introduction of malicious data into it

- They can be carried out by external perpetrators or by insiders who have access to the training data

- Their objective is to mislead the AI system and induce it to generate inaccurate or prejudiced outcomes.

The potential implications of poisoning attacks on AI models and systems are significant. They can:

- Lead to the dissemination of biased, unjust, or hazardous results

- Compromise the accuracy of machine learning models

- Heighten the susceptibility of AI models to further adversarial attacks

Therefore, understanding and mitigating such attacks is an essential part of AI security.
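To make the mechanism concrete, here is a minimal sketch of a poisoning attack on a toy nearest-centroid classifier. Everything in it is an illustrative assumption rather than something taken from the NIST report: the one-dimensional data, the helper names (`fit_centroids`, `predict`, `accuracy`), and the attacker's choice of injecting ten mislabeled points.

```python
from statistics import mean

def fit_centroids(samples):
    """Return {label: mean feature value} for a list of (feature, label) pairs."""
    return {label: mean(x for x, y in samples if y == label) for label in (0, 1)}

def predict(centroids, x):
    """Assign x to the class whose centroid is nearest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def accuracy(centroids, samples):
    return sum(predict(centroids, x) == y for x, y in samples) / len(samples)

# Hypothetical clean training set: class 0 lies in [0.0, 0.9], class 1 in [2.0, 2.9].
clean_train = [(i / 10, 0) for i in range(10)] + [(2 + i / 10, 1) for i in range(10)]

# Poison: the attacker injects far-away points falsely labeled as class 0,
# dragging the class-0 centroid past the class-1 centroid.
poison = [(6.0, 0)] * 10
poisoned_train = clean_train + poison

test_set = [(0.25, 0), (0.55, 0), (0.85, 0), (2.15, 1), (2.45, 1), (2.75, 1)]

clean_model = fit_centroids(clean_train)
poisoned_model = fit_centroids(poisoned_train)

print(accuracy(clean_model, test_set))     # prints 1.0
print(accuracy(poisoned_model, test_set))  # prints 0.5
```

In this toy setup the poisoned model misclassifies every class-0 test point, even though the original training samples were left untouched. That is one reason why checking the provenance and labels of training data matters.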

Evasion Attacks: Deceiving AI to Fail

Evasion attacks add another layer of complexity to the AI security landscape. These attacks mislead an AI system by modifying its input data, causing it to make erroneous decisions or change its response, which can ultimately lead to system failure. The predominant technique is to carefully craft inputs that look normal to humans yet mislead AI models into making errors.

There have been real-world examples of evasion attacks, such as using specially crafted stickers to deceive the AI of a self-driving car, leading it to misinterpret traffic signs or road conditions. The consequences of successful evasion attacks on AI systems can include inaccurate outputs or compromised functionality, with the severity varying by application.
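The idea of a small, targeted input change can be sketched with the fast gradient sign method (FGSM), a well-known technique from the research literature for crafting evasion inputs. The example below is a hedged illustration, not the attack used in the incidents described above: the linear model, its `WEIGHTS` and `BIAS`, the sample `x`, and the perturbation budget `EPSILON` are all made-up values.

```python
import math

# Hypothetical, already-trained linear classifier (illustrative values only).
WEIGHTS = [2.0, -3.0, 1.0]
BIAS = 0.5
EPSILON = 0.25  # maximum change allowed per input feature

def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def predict(x):
    return 1 if score(x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(x, true_label, epsilon):
    """Shift every feature by +/- epsilon in the direction that increases the loss.

    For logistic loss, the gradient with respect to the input is
    (sigmoid(score) - true_label) * weight.
    """
    p = 1.0 / (1.0 + math.exp(-score(x)))
    gradient = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]

x = [1.0, 0.5, 0.2]  # an input the model classifies correctly as class 1
x_adv = fgsm_perturb(x, true_label=1, epsilon=EPSILON)

print(predict(x))      # prints 1
print(predict(x_adv))  # prints 0
```

No feature moves by more than `EPSILON`, yet the prediction flips. For image models the same principle applies per pixel, which is why the change can be hard for a human to notice.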

Privacy and Abuse Attacks: Exploiting Sensitive Information

Privacy and abuse attacks exploit sensitive information within AI systems. Privacy attacks aim at extracting sensitive information about the AI or its training data, while abuse attacks involve the insertion of false information, which can manipulate the AI system’s behavior.

Sensitive information in AI systems, including confidential information, can be exploited through various methods, such as model inversion attacks that aim to recreate input data from the model’s output, and backdoor attacks, which involve hidden triggers that can cause intentional misbehavior.

The potential negative consequences of privacy and abuse attacks include:

- Unauthorized access or disruption of infrastructure

- Significant privacy implications

- The possibility of exploitation of personal data, resulting in various harmful effects.

Protecting AI Systems: Strategies and Challenges

Given these threats, protecting AI systems becomes a primary concern. Adversarial training, a crucial method for safeguarding AI systems, incorporates adversarial examples into the training data so that the model learns to recognize and correctly classify such inputs, thereby strengthening its resilience.
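A minimal sketch of adversarial training, under simplifying assumptions, looks like this. It trains a small logistic-regression model and, at every step, also trains on a perturbed copy of the sample crafted against the current weights. The dataset `DATA`, the budget `eps`, the learning rate, and all function names are hypothetical choices for illustration; production systems typically use deep-learning frameworks and stronger attacks.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def craft_adversarial(w, b, x, y, eps):
    """FGSM-style perturbation: move each feature by eps to increase the loss."""
    p = sigmoid(score(w, b, x))
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def sgd_step(w, b, x, y, lr):
    """One gradient step on the logistic loss for a single sample."""
    err = sigmoid(score(w, b, x)) - y
    return [wi - lr * err * xi for wi, xi in zip(w, x)], b - lr * err

def adversarial_train(data, eps, lr=0.1, epochs=50):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = craft_adversarial(w, b, x, y, eps)
            w, b = sgd_step(w, b, x, y, lr)      # learn from the clean sample
            w, b = sgd_step(w, b, x_adv, y, lr)  # and from its adversarial copy
    return w, b

def robust_accuracy(w, b, data, eps):
    """Accuracy on inputs perturbed against the final model."""
    hits = 0
    for x, y in data:
        x_adv = craft_adversarial(w, b, x, y, eps)
        hits += (1 if score(w, b, x_adv) > 0 else 0) == y
    return hits / len(data)

# Hypothetical toy dataset: two well-separated clusters.
DATA = [([2, 2], 1), ([2, 3], 1), ([3, 2], 1), ([3, 3], 1),
        ([-2, -2], 0), ([-2, -3], 0), ([-3, -2], 0), ([-3, -3], 0)]

w, b = adversarial_train(DATA, eps=0.5)
print(robust_accuracy(w, b, DATA, eps=0.5))  # prints 1.0
```

The toy data is easy enough that the model stays correct under attack. On realistic data, the extra adversarial step usually costs training time and some accuracy on clean inputs.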

Securing Algorithms and Data

Ensuring the security of algorithms and data is a critical component of AI security. It helps prevent unauthorized access to sensitive information and reduces the risks associated with insider threats. Effective strategies for securing AI algorithms include:

- Data encryption

- Adversarial learning

- Privacy-preserving learning

- Design of secure and privacy-preserving AI systems

To safeguard data in AI systems, it’s essential to implement best practices such as:

- Limitation and purpose specification

- Fairness

- Data minimization and storage limitation

- Transparency

- Privacy rights

- Data accuracy

Additionally, compliance with data security regulations is crucial for ensuring data protection.

Encryption enhances the security of data in AI systems by transforming sensitive information into an unreadable format that is only accessible and understandable by authorized individuals.

Responding to Adversarial Examples

AI defense relies heavily on how systems respond to adversarial examples. Adversarial training, which involves training models with adversarial examples, is widely regarded as the most effective current defense strategy.

Adversarial examples can be mitigated in two main ways. One is to add noise to the input data or filter it, which disrupts adversarial perturbations while preserving essential features. The other is to incorporate adversarial samples into the training dataset to enhance the system's resilience. However, these methods may reduce accuracy when classifying authentic samples, which points to a trade-off between security and performance.
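One simple form of input filtering is to reduce the precision of the input before it reaches the model, an idea sometimes called feature squeezing in the research literature. The sketch below is an assumption-laden illustration: the function name `squeeze`, the four quantization levels, and the sample values are invented for the example, and real inputs would be full images rather than four numbers.

```python
def squeeze(values, levels=4):
    """Quantize values in [0, 1] onto `levels` evenly spaced steps."""
    step = 1.0 / (levels - 1)
    clipped = (min(max(v, 0.0), 1.0) for v in values)
    return [round(v / step) * step for v in clipped]

clean = [0.0, 0.33, 0.67, 1.0]
perturbed = [0.05, 0.28, 0.72, 0.95]  # clean values shifted by +/- 0.05

# The small perturbation disappears after squeezing.
print(squeeze(clean) == squeeze(perturbed))  # prints True

# The cost: genuinely different inputs can collapse to the same value too.
print(squeeze([0.2]) == squeeze([0.45]))     # prints True
```

The second check shows the trade-off in miniature: the coarser the filter, the more adversarial noise it removes, but the more legitimate detail it discards as well.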

Ongoing Challenges in AI Defense

Even with these strategies in place, AI defense still grapples with persistent challenges. The changing nature of adversarial threats requires AI developers to consistently strengthen their defenses and remain one step ahead of adversaries who keep introducing new algorithms and innovations.

Unresolved challenges remain in identifying adversarial attacks against AI, both in making models more resilient through defense mechanisms and in improving detection methods. Beyond that, the continuous improvement of AI security measures is impeded by a range of practical obstacles: secure data collection, storage, and processing; robust data validation and cleansing; routine updates; false positives; lack of explainability; resource requirements; and ethical concerns. All of these must be addressed while still mitigating risks and safeguarding sensitive data.

Best Practices for AI Developers

In the midst of these challenges, AI developers must follow best practices for securing AI systems. These practices involve thoroughly considering multiple factors to guarantee robustness, adaptability, and dependability. Developers should also encourage stakeholder collaboration by maintaining regular and transparent communication and promoting co-creation to develop collaborative solutions.

Developing Resilient AI Models

The creation of resilient AI models forms an essential facet of these best practices. This involves:

- Adapting to circumstances and rebounding swiftly

- Engineering conditions that encompass the entire solution space and feasible scenarios

- Crafting interpretable AI models to detect biases and vulnerabilities

- Harnessing AI algorithms to anticipate potential crises and assist in devising timely response plans.

Adversarial training plays a crucial role in enhancing the resilience of AI models by exposing them to adversarial examples during the training phase. This helps models learn to recognize and counteract manipulations, making them more resilient to attacks in real-world situations.

Fostering Collaboration Amongst Stakeholders

Another significant practice is the cultivation of collaboration amongst stakeholders. The NIST framework can be utilized to encourage collaboration in AI security by offering a voluntary resource for organizations to oversee the risks associated with their AI systems and to facilitate collaboration with the private and public sectors.

Successful examples of stakeholder collaboration in AI defense include embedding ethics-by-design approaches aligned with responsible research and innovation, and analyzing an envisioned command and control (C2) system using A Method for Ethical AI in Defence.

Continuous Monitoring and Adaptation

AI security necessitates continuous monitoring and adaptation. It involves:

- Ongoing surveillance and analysis of an organization’s IT infrastructure

- Identifying and responding to threats in real-time

- Reducing the risk of successful cyber attacks

- Improving the overall security posture.

Continuous adaptation in AI security entails AI systems consistently acquiring knowledge from new data and revising their models or algorithms to handle advancing adversarial threats effectively. The essential elements of a comprehensive monitoring system for AI encompass:

- Model performance monitoring

- Data drift monitoring

- Outlier detection

- Model service health monitoring

These components are crucial in identifying and addressing emerging risks impacting production models and data.
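Two of these components, data drift monitoring and outlier detection, can be sketched with simple statistics. The example below is a minimal illustration under stated assumptions: it tracks a single numeric feature, compares a live window against a reference window using z-scores, and uses a threshold of 3. The function names and sample values are hypothetical, and production monitoring would normally cover many features and use more robust tests.

```python
from statistics import mean, stdev

def mean_shift_z(reference, live):
    """Z-score of the live-window mean relative to the reference window."""
    standard_error = stdev(reference) / len(live) ** 0.5
    return (mean(live) - mean(reference)) / standard_error

def outliers(reference, live, threshold=3.0):
    """Live values more than `threshold` reference standard deviations from the mean."""
    ref_mean, ref_std = mean(reference), stdev(reference)
    return [v for v in live if abs(v - ref_mean) / ref_std > threshold]

# Hypothetical feature values seen during validation (reference) and in production (live).
reference = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
live_ok = [10, 9, 11, 10]
live_drift = [14, 15, 13, 16]

print(abs(mean_shift_z(reference, live_ok)) > 3)     # prints False
print(abs(mean_shift_z(reference, live_drift)) > 3)  # prints True
print(outliers(reference, live_drift))               # prints [14, 15, 16]
```

A drift alert of this kind does not say whether the shift is benign or the result of an attack. It only signals that the production data no longer resembles what the model was validated on and deserves a closer look.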

Case Studies: Adversarial Attacks in the Wild

These threats are vividly demonstrated through real-world examples of adversarial attacks. In one such case, researchers showed that adversarial examples can deceive driver assistance systems, causing them to misinterpret traffic signs. Other techniques used in adversarial attacks include:

- Evasion attacks

- Data poisoning attacks

- Byzantine attacks

- Model extraction

- The creation of adversarial examples to deceive and undermine AI systems.

Highlighting Notable Incidents

Several notable incidents underscore the reality of these threats. For instance, researchers have managed to trick driver assistance systems in the automotive sector into misreading traffic signs, and have manipulated other systems using basic stickers on signs, resulting in hazardous driving choices like veering into traffic or obstacles.

The attackers behind such incidents vary and include cybercriminals involved in activities such as spreading fake news, money laundering, and other computer crimes. The AI systems most often targeted are those built for image classification tasks, where the impact of misleading the AI can be especially serious.

Lessons Learned and Applied Strategies

The lessons learned from these incidents have helped shape the development of AI security strategies. Insights gained from previous adversarial attacks have underscored the importance of allocating resources to AI-driven cybersecurity solutions for the efficient identification, mitigation, and counteraction of complex and rapidly evolving cyber threats.

Strategies worth considering to mitigate future AI security threats include:

- Prioritizing control of generative AI attacks

- Enhancing the development process to minimize the likelihood of data breaches and identity theft

- Continuous monitoring

- Tracking performance

- Regular updates

- Adversarial training

The Future of AI Security

An upsurge in potential threats is expected to characterize the future of AI security. The emerging challenges in AI security encompass data poisoning, SEO poisoning, and the involvement of AI-enabled threat actors.

As AI and ML technologies become more integrated into cyberattacks, the landscape of AI security is expected to transform.

Emerging Threats and Predictions

Moving forward, the challenge of adversarial machine learning will persist. The emerging challenges in adversarial machine learning encompass:

- Evasion attacks

- Poisoning attacks

- Attacks on various modalities such as computer vision, natural language processing, speech recognition, and tabular data analytics.

It is anticipated that adversarial attacks will continue to evolve and escalate in the future, in tandem with the advancement of AI technology and the evolution of economic interests.

Preparing for Tomorrow’s AI Landscape

Given these emerging threats, it is essential to adapt and evolve our AI security measures accordingly. To prepare for forthcoming AI security challenges, it is advisable to:

- Evaluate the trade-offs between safety and security

- Secure generative AI and ChatGPT sessions

- Monitor for new attack vectors

- Take proactive steps to identify and address vulnerabilities

- Explore the use of blockchain for data security

- Incorporate human oversight

- Establish pertinent regulations.

Summary

In conclusion, the field of AI security is a rapidly evolving landscape, marked by the emergence of new threats and the constant need for adaptation. Adversarial machine learning poses unique and complex challenges, and understanding these threats is a crucial part of maintaining the reliability and effectiveness of AI systems.

The importance of securing AI algorithms, protecting AI systems, and staying ahead of evolving adversarial threats cannot be overstated. As we move forward, continuous improvement and collaboration among stakeholders will be paramount in developing robust and resilient AI systems. The future of AI security may be marked by new challenges, but with vigilance, collaboration, and continuous adaptation, we can strive to stay one step ahead.